The Physical Characteristics of Conventional Disks

The hard disk has one or more metal platters coated top and bottom with a magnetic material similar to the coating on a VCR magnetic tape. Information is recorded onto bands of the disk surface that form concentric circles. The circle closest to the outside is much bigger than the circle closest to the center. If there are four metal platters on a disk, then information is recorded on the top and bottom side of each platter and there are eight identically sized circles of recorded information at each distance out from the center.

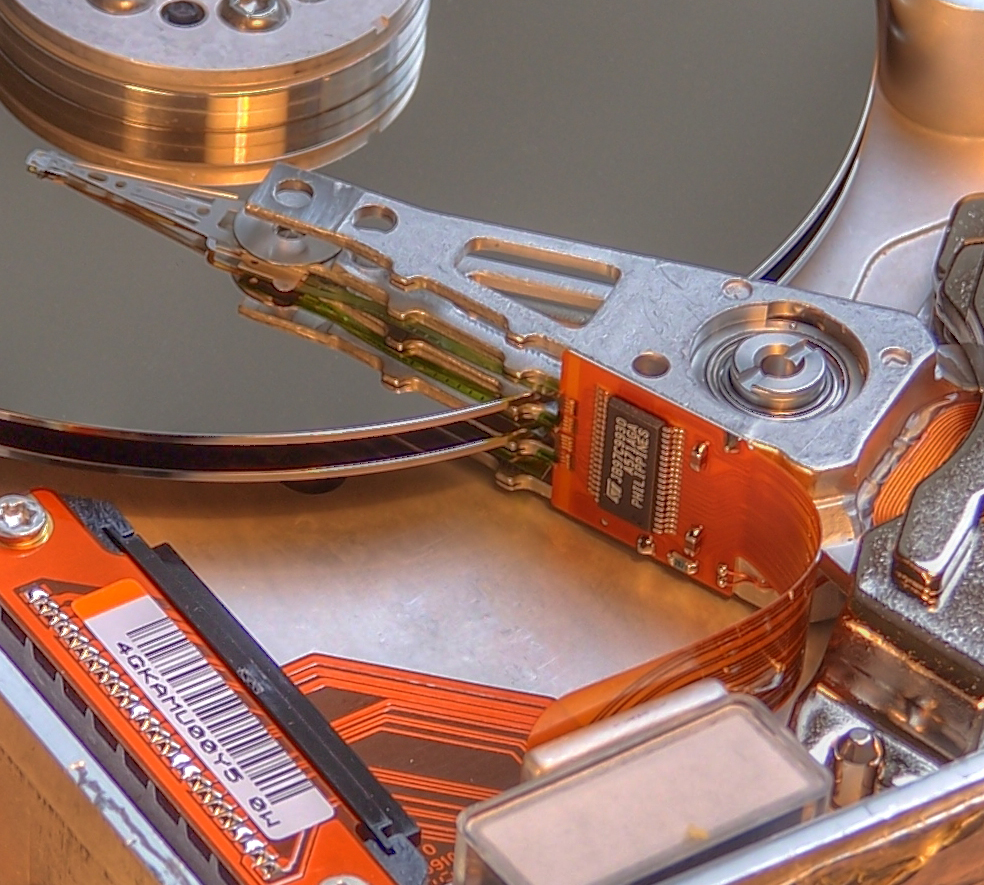

There is a separate magnetic read/write head for each disk surface mounted on an “arm” that moves in and out across the surface of the disk under the control of an electric motor.

If the next piece of data you need to read from the disk is located at a different arm position, the arm must move. This is called a seek. The speed of the seek depends on the power of the electric motor that moves the arms. Laptop disks tend to have less powerful motors to save battery power. Desktop drives take about 9 milliseconds (0.009 seconds) to move to a random location on the disk. Server disks, which have to do a lot of seek activity to support databases, tend to use a more powerful motor that can seek in half the time of a desktop drive. However, the more powerful the seek, the more noise the disk makes when seeking. Putting a server disk in a home computer is expensive, noisy, and probably unnecessary. Today, those who want to improve seek performance for a laptop or desktop computer will probably purchase a Solid State Disk (SSD) and get rid of the seek entirely.

Once the arm is in position, it is necessary to wait for the data you are interested in to rotate around on the surface of the disk until the start of the data is positioned under the arm location. This is called the rotational latency. On average, this delay will be one half the time it takes for the disk to rotate completely. For example, on a desktop disk with a 7200 RPM rotation speed, this is half of 60/7200 seconds or 0.0042 seconds. Server disks rotate at 10,000 or 15,000 RPM. So it is common for the latency to be approximately half the seek time.
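The latency arithmetic is easy to reproduce. The following is only a small sketch: the function name is made up for this example, and it assumes the average wait is exactly half of one rotation, as described above.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency in milliseconds: half of one full rotation."""
    seconds_per_rotation = 60.0 / rpm
    return (seconds_per_rotation / 2.0) * 1000.0


# Rotation speeds mentioned in this article.
for rpm in (5400, 7200, 10000, 15000):
    print(f"{rpm:>6} RPM: {average_rotational_latency_ms(rpm):.2f} ms")
```

At 7200 RPM this gives roughly 4.2 milliseconds, matching the 0.0042 seconds quoted above.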

Data is recorded in sectors that record a certain amount of data. The first PC hard disk had a sector size of 512 bytes, and most disks today still use that sector size. At the raw hardware level, you cannot read or write part of a sector, so the smallest amount of data you can transfer off the disk is a block of 512 bytes. To change a single byte in a file, you have to read the 512 byte sector containing it, change the byte, and then rewrite the sector back to disk.
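The read, change, rewrite cycle can be sketched with a toy in-memory "disk". Everything here (the `ToyDisk` class and the `change_byte` helper) is hypothetical and exists only to illustrate the idea that a sector is transferred whole; a real driver talks to hardware, not to a byte array.

```python
SECTOR_SIZE = 512  # bytes per sector, as in the text


class ToyDisk:
    """An in-memory stand-in for a disk that only transfers whole sectors."""

    def __init__(self, sector_count: int) -> None:
        self._data = bytearray(sector_count * SECTOR_SIZE)

    def read_sector(self, number: int) -> bytes:
        start = number * SECTOR_SIZE
        return bytes(self._data[start:start + SECTOR_SIZE])

    def write_sector(self, number: int, sector: bytes) -> None:
        if len(sector) != SECTOR_SIZE:
            raise ValueError("a sector must be written whole")
        start = number * SECTOR_SIZE
        self._data[start:start + SECTOR_SIZE] = sector


def change_byte(disk: ToyDisk, byte_offset: int, value: int) -> None:
    """Change one byte by reading, modifying, and rewriting its sector."""
    sector_number, offset_in_sector = divmod(byte_offset, SECTOR_SIZE)
    sector = bytearray(disk.read_sector(sector_number))  # read 512 bytes
    sector[offset_in_sector] = value                     # change one byte
    disk.write_sector(sector_number, bytes(sector))      # rewrite 512 bytes


disk = ToyDisk(sector_count=4)
change_byte(disk, byte_offset=700, value=0xAB)  # byte 700 lives in sector 1
```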

The 512 byte sector size made sense on the first 10 megabyte sized PC hard disk, but it has been too small for at least a decade. Operating systems use a 4096 (4K) page size that controls their entire memory management, buffering, and caching system. File systems have a 4K or larger basic allocation unit. The computer industry has agreed to move to a 4096 byte sector size over time. There is an official date of Jan 2011, but some disks are available today and older disk models will still be available after that date. Windows 7 supports a 4096 byte sector, but Windows XP requires a driver update.

The obvious problem is that if you use a 32 bit sector number to address 512 byte sectors, you cannot address more than 2 terabytes of data. So you have to go to a larger sector size, a larger sector number, or both. Since you can combine more than one disk in a RAID configuration and easily exceed the 2 terabyte limit, there are disk driver interfaces that already support larger disk sizes, particularly on server computers. A larger sector size is one of the things that can be done to extend the lifetime of the simple driver interfaces.
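The 2 terabyte figure follows directly from the arithmetic. As a quick check (the function name is invented for this sketch, and a terabyte is taken to mean 2 to the 40th power bytes):

```python
TERABYTE = 2 ** 40  # binary terabyte, an assumption made for this sketch


def max_addressable_bytes(sector_number_bits: int, sector_size: int) -> int:
    """Largest capacity reachable with the given sector number width."""
    return (2 ** sector_number_bits) * sector_size


# 32 bit sector numbers with the two sector sizes discussed above.
print(max_addressable_bytes(32, 512) // TERABYTE)   # capacity in terabytes
print(max_addressable_bytes(32, 4096) // TERABYTE)  # capacity in terabytes
```

Moving from 512 byte to 4096 byte sectors multiplies the limit by eight without touching the width of the sector number.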

Historically, the data stored on one of the concentric circles on a disk surface is called a “track”. Each track has a starting point where the first sector on that track is written, and then additional sectors are written to the track until it is full. If you start anywhere in a track you can read sectors until you hit the end of the track. After that, the next sector in the file will be on some other track.

As you move out from the center of the disk the size of the circle gets larger. You can fit more sectors into the circle at the outside edge of the disk than you can on the innermost circle. Only the electronics on the disk itself keep track of the number of sectors available at each position of the read/write arm. Operating systems today tend to identify data by a sector number and they leave it up to the disk electronics to figure out where to move the arm and where during the rotation to read the data.

Green (Power Saving)

If you are making a large number of random reads and writes to different parts of the disk, then you need the disk to seek and rotate at top speed. That describes a Server disk, but desktop and laptop disks are often idle for long periods of time. They become busy in bursts.

An idle disk is still rotating, so the motor is using up electricity and, on a laptop, using up battery. If the disk remains idle for a long period the power management feature of the operating system can order it to power down and stop spinning. However, during the in between period where the disk is occasionally but not heavily used, you can give up some performance in exchange for power savings.

Most laptop disks rotate at 5400 RPM instead of the more common 7200 RPM for desktop disks. Some “Green” technology disks have a variable rotation speed. During periods of low use (which is most of the time) they slow down to 5400 RPM. During a burst of activity, however, the disk begins to use more energy and speeds up the rotation to 7200 RPM.

The same trick can be used with seek time. A smart Green drive can calculate how long it will take the arm to move to the location of the data. It can also calculate where the data will be on the disk surface when the arm gets to the right location.

If you know that it will take you at least 4 minutes to get to the bus stop, and the next bus will arrive at the stop in 2 minutes, and the next bus after that will be 15 minutes later, then it makes no sense for you to run and get all overheated. Take your time, walk slowly, window shop, talk on the cell phone. A Green disk controller can make the same decision about moving the arm based on the current rotation position of the data. If when the arm gets there the data will be out of position and will require 3/4 of a rotation to come back around under the arm, then it makes no sense to use the maximum amount of energy to move the arm as fast as possible. The controller uses less energy to move the arm slower, just so long as the arm gets into position before the data.
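The decision described above can be sketched in a few lines. This is only an illustration of the reasoning, not how any particular drive firmware works; the function name and its parameters are assumptions made for the example. Given the fastest possible seek, the time until the data next passes under the arm position, and the rotation period, it returns how long the arm can take without delaying the read.

```python
def seek_time_budget(fastest_seek: float, first_pass: float, rotation: float) -> float:
    """Latest arrival time that still catches the earliest reachable pass.

    All arguments share one time unit. `first_pass` is when the data next
    rotates under the target arm position; it comes around again every
    `rotation` units after that.
    """
    if rotation <= 0:
        raise ValueError("rotation period must be positive")
    arrival = first_pass
    while arrival < fastest_seek:
        arrival += rotation  # cannot make this pass, wait for the next one
    return arrival


# The bus stop example: a 4 minute walk, a bus in 2 minutes, the next 15 later.
print(seek_time_budget(fastest_seek=4, first_pass=2, rotation=15))

# A 7200 RPM disk: one rotation takes 60000 / 7200 milliseconds.
print(seek_time_budget(fastest_seek=4.0, first_pass=2.0, rotation=60000 / 7200))
```

In the bus example the answer is 17 minutes, so there is no point in covering the distance in 4.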

Solid State Disks

An SSD uses “flash” memory that does not lose its contents when the power is turned off. It is a version of the flash memory device that you hang on a key chain and plug into a USB port, but in an SSD the memory is much faster. Because there are no moving parts, data in an SSD disk can be accessed without seek delay or rotational latency. Vendors quote a sustained transfer rate of 200 megabytes per second when reading and 100 megabytes per second writing sequential files. However, if you store large sequential files on an SSD you are wasting money. Rotating disks perform just as well and cost a lot less. SSDs should be used for small random access, such as happens when the operating system boots off the system disk.

In normal (SDRAM) computer memory you can read or write individual bytes. On a regular hard drive you can read or write 512 byte sectors. However, flash memory works differently. In flash memory you can erase (set to all 1’s) a block of what is typically a half-megabyte of data. After the block is erased, you can individually write the 0 bits. Once a bit has been set to 0, however, you cannot set it back to 1. Not the bit, not the byte, not the sector. You can only erase to 1 the entire half megabyte block.

The problem is that operating systems and programs are not written to operate in half megabyte blocks. At most, a program might be optimized to rewrite 512 byte sectors or 4096 byte pages. So in order to operate at maximum efficiency, the device driver in the OS and the controller chip on the SSD have to translate and optimize the disk operations performed by the programs.

There is no particular reason why data has to be stored inside the SSD memory in the same order it would be stored on a disk surface. The disk controller can translate the disk address generated by the OS into a memory address in the flash chips. So one performance trick is to have more memory inside the SSD than the size of the disk that is exposed to the computer. The extra memory can hold a pool of previously erased half-meg blocks. When the computer tries to rewrite a sector on the “disk”, the controller can copy the entire half megabyte around that sector, with the new sector data substituted, to a previously erased block and then map the “disk address” that previously pointed to the old data onto the new block. The old block is then queued up to be erased when the disk has some idle time, after which it is added to the hidden pool of erased blocks.
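The remapping trick can be modeled with a toy translation layer. This is a sketch under simplifying assumptions, not a description of any real SSD controller: the class name, its methods, and the tiny block size are all invented for illustration, and sector contents are plain Python objects rather than flash cells.

```python
from collections import deque


class ToyFlashTranslationLayer:
    """Maps the blocks the computer sees onto a larger set of physical blocks."""

    def __init__(self, visible_blocks: int, spare_blocks: int, sectors_per_block: int) -> None:
        total = visible_blocks + spare_blocks
        self.sectors_per_block = sectors_per_block
        self.physical = [[None] * sectors_per_block for _ in range(total)]
        self.mapping = list(range(visible_blocks))              # logical -> physical
        self.erased_pool = deque(range(visible_blocks, total))  # hidden spare blocks
        self.erase_queue = deque()                              # waiting for idle time

    def read_sector(self, logical_sector: int):
        block, offset = divmod(logical_sector, self.sectors_per_block)
        return self.physical[self.mapping[block]][offset]

    def write_sector(self, logical_sector: int, data) -> None:
        block, offset = divmod(logical_sector, self.sectors_per_block)
        if not self.erased_pool:
            self.idle()  # no spare block left, so erase right now
        old = self.mapping[block]
        new = self.erased_pool.popleft()
        # Copy the whole surrounding block, substituting the new sector.
        self.physical[new] = list(self.physical[old])
        self.physical[new][offset] = data
        self.mapping[block] = new     # the "disk address" now points here
        self.erase_queue.append(old)  # erase the old block later

    def idle(self) -> None:
        """Erase queued blocks and return them to the hidden pool."""
        while self.erase_queue:
            old = self.erase_queue.popleft()
            self.physical[old] = [None] * self.sectors_per_block
            self.erased_pool.append(old)


ftl = ToyFlashTranslationLayer(visible_blocks=2, spare_blocks=2, sectors_per_block=4)
ftl.write_sector(5, b"new data")  # sector 5 is in logical block 1
```

The computer keeps using sector 5 throughout; only the controller knows that the data has moved to a different physical block.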

If you have a moderate amount of money to spend on a system, it makes sense to get a small SSD as the primary or system disk to hold the OS and your most frequently used moderate sized applications. Then get one or more conventional rotating hard drives to hold bulk files and big applications. If you have a laptop, the SSD would be inside and the large rotating disks could be external and connected by e-SATA.

File Systems

If you look at a disk at the hardware level, it appears to be a device with numbered disk sectors sized either 512 or 4096 bytes. You send the disk a sector number, it finds the sector, and then you can read or write the sector data. Although you can use the entire disk as a single storage unit, it is more common to divide a disk up into a small number of separate areas called “partitions”. Each partition acts like a smaller disk.

To turn a disk/partition into a large number of directories and files, you need to format it with a file system. In Windows, the type of file system is NTFS. However, for the purpose of this discussion we only need to discuss the generic problems faced by all filesystems.

Although the disk may have 512 byte sectors, almost no modern file system wants to be bothered with anything that small. In almost every case, a file system will group units of 8 small sectors into a 4096 byte page or “allocation unit”. Optionally, a modern file system may even regard 4K as much too small an amount of data to worry about on a 1 or 2 terabyte hard disk, and it may create a larger allocation unit (say 64K). What this means is that while you might write a file that contains only one byte of data, the smallest unit of disk space that the file system will assign to the file is a single allocation unit, whatever that may be.
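The effect of the allocation unit on disk usage is simple rounding. A minimal sketch follows; the function name is made up, and treating an empty file as using no allocation units is an assumption of this example rather than a rule of any particular file system.

```python
def space_on_disk(file_size: int, allocation_unit: int = 4096) -> int:
    """Bytes of disk space assigned to a file of the given size."""
    if file_size == 0:
        return 0  # assumption: an empty file needs no allocation unit
    units = -(-file_size // allocation_unit)  # ceiling division
    return units * allocation_unit


print(space_on_disk(1))          # a one byte file with 4K units
print(space_on_disk(1, 65536))   # the same file with 64K units
print(space_on_disk(4097))       # one byte over a unit boundary
```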

When you format a partition, you create a set of sectors that contains something called the “directory” or “volume table of contents” or “master file table”. It contains three types of information:

- Free Space Inventory - There is an inventory of unused allocation units available for assignment either to create new files or to extend the size of existing files. In any rational file system, this structure will be optimized to be able to create new small files from the random scattered chunks of free space left over when old files are deleted, or to create new big files out of the continuous empty sectors from the large unallocated parts of the disk.

- File Location Table - An entry for each file on the disk (typically identified by a number) that lists the numeric addresses of the single allocation units or of large contiguous blocks of allocation units that have been assigned to hold the data of the file. There may also be attributes like the file access permissions.

- File Name Dictionary - A structure that holds the names of directories and files (sorted in alphabetic order and structured to be easily searched) that relates a file name to a numbered entry in the previous File Location Table.

All file systems have these three structures, though different systems give each different names. When the partition is formatted, all the allocation units are assigned to the Free Space Inventory and the file list and dictionary are empty. Now the computer goes to write the first file on disk. If it is copying a file from another disk or CD/DVD, it can determine from the input how big the file is going to be. It can then ask the file system to create a new file number in the File Location Table and assign to it a block of allocation units from the Free Space Inventory large enough to hold the data. Then the data is copied into these allocation units on disk. The file name is entered into the Dictionary, and points to the number ID of the file in the File Location Table.

Now you copy a bunch of files. Eventually you delete some file. When a file is deleted, the name is removed from the Dictionary, the entry is removed from the File Location Table, and the allocation units are returned to the Free Space Inventory. If the file is renamed, then the Dictionary simply needs to be changed. Because the directory tree is just part of the file name, “moving” a file from one directory to another directory in the same partition is simply another type of rename and does not involve copying the data.
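The interplay of the three structures can be sketched with ordinary Python containers. This toy model is hypothetical and ignores everything a real file system has to worry about (on-disk layout, fragmentation strategy, permissions); it only shows which structure each operation touches.

```python
class ToyFileSystem:
    """The three generic structures, held in memory for illustration."""

    def __init__(self, total_units: int) -> None:
        self.free_space = set(range(total_units))  # Free Space Inventory
        self.file_table = {}                       # File Location Table: number -> units
        self.names = {}                            # File Name Dictionary: path -> number
        self._next_number = 0

    def create(self, path: str, units_needed: int) -> int:
        if units_needed > len(self.free_space):
            raise OSError("not enough free allocation units")
        units = sorted(self.free_space)[:units_needed]
        self.free_space.difference_update(units)   # take units out of free space
        number = self._next_number
        self._next_number += 1
        self.file_table[number] = units            # record where the data lives
        self.names[path] = number                  # name points at the file number
        return number

    def delete(self, path: str) -> None:
        number = self.names.pop(path)
        self.free_space.update(self.file_table.pop(number))

    def rename(self, old_path: str, new_path: str) -> None:
        # Only the dictionary changes; no data is copied.
        self.names[new_path] = self.names.pop(old_path)


fs = ToyFileSystem(total_units=10)
number = fs.create("/reports/q1.txt", units_needed=3)
fs.rename("/reports/q1.txt", "/archive/q1.txt")  # a "move" is just a rename
```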

What happens in the middle of one of these operations if the power fails or the computer crashes? There is a moment during file creation after allocation units have been removed from the Free Space Inventory and before they have been written to the File Location Table, and another moment during file deletion after allocation units have been removed from the File Location Table but before they have been written to the Free Space Inventory. At these points, if the system crashes then when it comes back up the allocation units may be lost.

One solution is to perform a comprehensive inventory and check of the three types of information. In Windows, this is called CHKDSK. The system starts by creating a table of all the allocation units in the partition. Then it reads through the Free Space Inventory and File Location Table to compare all those entries to each other and make sure that all allocation units are accounted for. In the previous cases, there will be allocation units that are in no table and they can be added to the Free Space Inventory. However, there can be system errors where the same allocation unit is in two places. If a unit is in both the Free Space Inventory and also apparently allocated to a file, then the free space entry can be deleted. The real problem is if the same allocation unit is listed as being assigned at the same time to two different files. Now one or both of the files is damaged, but there is no way to know for sure. This is the one type of error that cannot be automatically corrected.
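The cross-check described above can be sketched as a single function. This is not how CHKDSK is implemented; it is a hypothetical illustration that takes the Free Space Inventory as a set of unit numbers and the File Location Table as a dictionary from file number to unit list.

```python
from collections import defaultdict


def check_volume(total_units: int, free_space: set, file_table: dict):
    """Return (repaired free space, units claimed by more than one file)."""
    owners = defaultdict(list)
    for file_number, units in file_table.items():
        for unit in units:
            owners[unit].append(file_number)
    allocated = set(owners)

    # Units in no table at all were lost in a crash: give them back.
    lost = set(range(total_units)) - free_space - allocated
    # Units both free and allocated: drop the free space entry.
    repaired_free = (free_space - allocated) | lost
    # Units claimed by two files cannot be repaired automatically.
    cross_linked = {unit: files for unit, files in owners.items() if len(files) > 1}
    return repaired_free, cross_linked


free, damaged = check_volume(
    total_units=8,
    free_space={0, 1, 2},
    file_table={1: [2, 3], 2: [3, 4]},
)
print(sorted(free))  # units 5, 6, 7 recovered; unit 2 no longer listed as free
print(damaged)       # unit 3 is claimed by files 1 and 2
```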

However, rebuilding the entire partition control information is a slow process, and you do not want to do it every time the system crashes. So most modern file systems have a “journal” area. The file system writes in the journal what it is about to do, then it does it, and then it marks the operation complete in the journal. After a crash, when the system comes up it looks in the journal to determine what operation was being performed, and cleans up that specific aborted operation.
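The write-intent, do it, mark-complete sequence can be sketched for the file deletion case. This is an in-memory illustration with made-up names; a real journal lives on disk and has to survive the crash itself, and the `crash_midway` flag here merely simulates a power failure at the dangerous moment.

```python
def delete_file(free_space: set, file_table: dict, journal: list,
                file_number: int, crash_midway: bool = False) -> None:
    entry = {"op": "delete", "file": file_number,
             "units": list(file_table[file_number]), "done": False}
    journal.append(entry)              # 1. write what is about to happen
    del file_table[file_number]        # 2. remove from the File Location Table
    if crash_midway:
        return                         #    simulated crash: units are in limbo
    free_space.update(entry["units"])  # 3. return units to the Free Space Inventory
    entry["done"] = True               # 4. mark the operation complete


def recover(free_space: set, file_table: dict, journal: list) -> None:
    """After a restart, finish any operation the journal shows as incomplete."""
    for entry in journal:
        if entry["done"]:
            continue
        if entry["op"] == "delete":
            file_table.pop(entry["file"], None)  # safe to repeat
            free_space.update(entry["units"])    # safe to repeat
        entry["done"] = True


free_space, file_table, journal = {0}, {7: [1, 2]}, []
delete_file(free_space, file_table, journal, 7, crash_midway=True)
recover(free_space, file_table, journal)  # units 1 and 2 are free again
```

Only the one interrupted operation is examined, which is why this is so much faster than a full inventory of the partition.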

There are also certain optimizations available. For example, when a file is deleted but the disk already has lots of free space, there is no need to actually destroy the old entry in the File Location Table. It can, instead, be marked as deleted but left in place. In computer jargon, it is “tombstoned” (marked in place as deceased) and becomes essentially an extension of the Free Space Inventory held in a File Location Table entry. One advantage of this technique is that an accidentally deleted file can be recovered (undeleted) by resurrecting the File Location Table entry (as long as the file system did not fill up and actually reassign the allocation units to some other file).

Archive (Zip) Files

An archive is a directory or directory tree that has been copied into a single file. Sometimes it is compressed. File types include *.zip, *.tar, *.cab and lots of other extensions.

An archive contains a directory that names each member file and points to the byte address in the parent archive file where that individual member file is located.
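You can look at such a directory with the `zipfile` module from the Python standard library. The sketch below builds a small archive in memory and lists each member name with the byte offset of its header inside the archive; the member names and the `member_offsets` helper are invented for the example.

```python
import io
import zipfile


def member_offsets(archive_bytes: bytes) -> dict:
    """Map each member name to the byte offset of its header in the archive."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        return {info.filename: info.header_offset for info in archive.infolist()}


# Build a two member archive in memory rather than on disk.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("docs/readme.txt", "hello")
    archive.writestr("docs/notes.txt", "world")

offsets = member_offsets(buffer.getvalue())
for name, offset in offsets.items():
    print(f"{name} starts at byte {offset}")
```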

In Windows 7, the VHD file format previously associated with virtual machines is now supported by the operating system as a kind of super archive. Zip files only support the lowest common denominator allowed by all operating systems. Member files in a VHD can have all the attributes and features of NTFS, including Unicode file names (file names in Russian, Arabic, or Chinese characters) and individual permissions.

CD DVD Blu-Ray

A DVD is a high density CD, and a Blu-Ray disk is a higher density DVD. Collectively, these are referred to as “optical storage”.

There are two types of optical disks. When you buy an audio CD or movie DVD from Amazon, you get a “manufactured” optical disk because the data is put onto the disk as part of the manufacturing process. Frequently the data is represented by small “pits” in the actual disk surface. A “writable” disk (CD-R, DVD+R, BD-R) has a dye material added to the disk. Initially the material is transparent, but when the laser light is applied to heat it up it changes state and becomes opaque.

Optical disks have 2048 byte sectors, and unlike a hard drive where the sectors are written in concentric circles on the disk surface, the data in an optical disk forms a single continuous spiral from the center to the outside edge of the disk. Optical disks have a high density, but because of the laser and the spiral they have a very slow seek time. Because they are typically used to hold music, video, backup data, or program distribution, random access is not an important issue.

Unlike a hard drive, where the file system is designed for random access and update, an optical disk typically has a directory that is written to the disk after all the data has been written. There are rewritable versions of optical storage, but this application has largely been replaced today by either large cheap hard disks or flash USB drives. The (re)writable DVD media is not as convenient or cost competitive with these alternatives.

Hard Disk Sizes

3.5 inch - This is the size of all desktop disks and many server disks. The large disk holds more data, and capacities are available up to 2 terabytes (2000 gigabytes).

2.5 inch - A smaller disk surface holds less data, but it is lighter and requires less power to rotate. This is the typical disk size for all but the smallest, thinnest laptops (to save battery). Recently it has also become a common size for enterprise disks that typically do not require large capacities, but do require better performance and lower power drain.

1.8 inch - The small, thin laptops choose an even smaller disk with less capacity but even lower power use.

External Disks

You can put a 3.5 or 2.5 inch hard drive in a separate external case, or buy the disk and case together as a packaged product. Different models of external disk connect to the computer in one of three ways:

- USB 2.0 can transfer data at up to 40 megabytes per second, about half the native speed of the disk. Every computer has several of these connectors, and if you run out you can connect an external hub. This is a good choice for backup or casual use, but the lower performance means that you don’t want to use it for heavy applications.

- e-SATA is an external version of the same SATA cable that connects the hard drives inside your computer. It allows an external hard drive to run as fast as internal drives. When a laptop has an e-SATA connector (or when you add one by plugging an ExpressCard into the laptop), then an external 3.5 inch 7200 RPM disk would actually be faster than the built in 2.5 inch 5400 RPM disk.

- USB 3.0 is a new version of USB that is as fast as e-SATA. Unfortunately, few mainboards and almost no external disks support this option. When it becomes more common it will be a reasonable substitute, but in the short term you might use e-SATA instead.

The connectors in the back of a SATA disk were designed to push into a socket. The SATA system can be “hot pluggable”, which means the OS will detect when a disk is plugged into a SATA connection and can be told to shut the disk down and allow it to be removed. Therefore, the external case is not really required. For around $30 you can buy a bracket that mounts in one of the 5 1/4 inch bays on the front of a desktop computer, or else you can buy a standalone box that sits on your desk and connects to the computer with an e-SATA or USB cable. Slide a naked SATA disk into the slot and the system recognizes it. Tell the system to power it down, and you can remove it and replace it with a different disk.

A standard laptop 2.5 inch disk is designed to save battery, not to perform well. You can replace it with an SSD to get better performance, but now you cannot afford high capacity without breaking the bank. So an alternative is to connect the laptop to an external full speed 3.5 inch disk at home, in the office, or both.

Performance

You have now been given all the numbers, but you won’t appreciate what they mean until we run a few calculations.

Random Small Operations are Slow

Suppose you read some random small pieces of data scattered all over the disk. Each time you jump from one piece of data to the next, you have to wait for a seek to complete and then for the rotational latency. If you add the 9 millisecond average seek to the average latency (half of a single 7200 RPM rotation) you discover that a typical desktop hard drive can do about 75 random reads per second.
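The calculation is short enough to check yourself. In this sketch the function name is made up, and each random read is assumed to cost one average seek plus one average rotational latency, with the transfer time of the small piece of data ignored.

```python
def random_reads_per_second(seek_ms: float, rpm: float) -> float:
    """Random small reads per second: one average seek plus half a rotation each."""
    latency_ms = (60_000.0 / rpm) / 2.0
    return 1000.0 / (seek_ms + latency_ms)


# Desktop disk from the text: 9 ms seek at 7200 RPM.
print(round(random_reads_per_second(seek_ms=9.0, rpm=7200)))
# Server disk from the text: 4.5 ms seek at 15,000 RPM.
print(round(random_reads_per_second(seek_ms=4.5, rpm=15000)))
```

The desktop figure comes out near 76, which is the "about 75" quoted above, and the server disk manages roughly twice that.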

Large Sequential Operations are Fast

To get started, the disk has to seek to the right position and wait for the data to rotate around. Once in position, however, the disk can transfer data at whatever speed the data physically rotates past the head. This sequential transfer rate gets higher with each new generation of disk that crams more data into a smaller amount of space. Today, the disk can typically read or write data at speeds of 60 to 100 megabytes per second (faster on the outer parts of the disk where the circle is larger and can hold more data).
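To see how large the gap between the two access patterns is, compare reading one gigabyte in a single sequential pass with reading the same gigabyte as scattered 4K pieces. This is a rough model with invented function names; it assumes a 100 megabyte per second transfer rate and that every random request pays a full average seek and rotational latency.

```python
import math

ACCESS_MS = 9.0 + 4.17  # average seek plus average latency for a 7200 RPM desktop disk


def sequential_read_seconds(total_bytes: int, mb_per_second: float) -> float:
    """One seek and latency up front, then pure data transfer."""
    return ACCESS_MS / 1000.0 + total_bytes / (mb_per_second * 1_000_000)


def random_read_seconds(total_bytes: int, request_bytes: int, mb_per_second: float) -> float:
    """Every request pays the seek and latency before its small transfer."""
    requests = math.ceil(total_bytes / request_bytes)
    per_request = ACCESS_MS / 1000.0 + request_bytes / (mb_per_second * 1_000_000)
    return requests * per_request


ONE_GIGABYTE = 10 ** 9
print(f"sequential: {sequential_read_seconds(ONE_GIGABYTE, 100):.1f} s")
print(f"random 4K:  {random_read_seconds(ONE_GIGABYTE, 4096, 100):.0f} s")
```

Under these assumptions the sequential read finishes in about ten seconds while the random version takes the better part of an hour.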

The System Optimizes (Up to a Point)

Suppose your program reads in part of a large file, processes it, and then writes it back out. If the computer actually read and then wrote data 4K at a time, then performance would be limited by the seek and latency time as the arm moves from the input to the output file on disk. You could manually improve performance by reading and writing larger blocks of data (one or more megabytes per operation). However, the operating system and drivers should be able to optimize even a sloppy program. The system can distinguish a program that is reading and writing sequentially from one that is doing random access. It can allocate unused memory to cache data, so even when the program is logically doing small I/O operations the OS aggregates the data and turns the requests into large I/O operations with fewer seeks and more data transfer per seek.

A Second Disk is Sometimes Better

If you are reading one large file and writing a second one, then the best performance is achieved when you split the I/O across two disks. The arms on one disk can remain positioned over the input file while the arms on the second disk are positioned over the output file. Then there is very little seek delay and maximum data transfer.

Striping and RAID are Not Always Good

A RAID configuration spreads the data across several disks. Typically a chunk of every file is written to one disk, then the next chunk goes on the next disk, and so on. If the speed at which you can read a file is determined by the speed the data rotates under the arm, two disks can transfer twice as much data in the same amount of time. This means you can read all the way to the end of the file quicker.

If you have a database that is doing random I/O to read and write small amounts of data, so that the typical amount of data processed is smaller than the size of the chunk that is written to one disk before going on to the next disk, then any given random I/O operation will select the disk that contains the chunk that contains the data, and only that one disk will be used.

However, if you do random access of larger blobs of data, then some of this large blob of data will be written on each disk. So all of the disks have to seek to the same relative location on disk in order to transfer the entire blob your program needs. Then when somebody else wants a different blob, all the disks simultaneously move to the new location. The worst case example of this is the start up of a large program. All the disks seek in order to read the program into memory, then they seek to a different location to read data.
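Which disks a request touches depends only on its offset, its length, and the chunk size. The sketch below assumes simple round-robin striping with no parity (the function name, the 64K chunk size, and the four disk array are all choices made for this example).

```python
def disks_touched(offset: int, length: int, chunk_size: int, disk_count: int) -> list:
    """Disks that must seek to satisfy a request in a round-robin striped array."""
    first_chunk = offset // chunk_size
    last_chunk = (offset + length - 1) // chunk_size
    return sorted({chunk % disk_count for chunk in range(first_chunk, last_chunk + 1)})


CHUNK = 64 * 1024  # assumed chunk size

# A small database read fits inside one chunk, so only one disk moves.
print(disks_touched(offset=70_000, length=4096, chunk_size=CHUNK, disk_count=4))

# A one megabyte blob spans many chunks, so every disk has to seek.
print(disks_touched(offset=0, length=1_000_000, chunk_size=CHUNK, disk_count=4))
```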

If you understand how programs use disks, you may be able to optimize the distribution of files across them. For example, most database systems have large data files and a smaller log file. The data and log files are used in each operation. So performance is optimized if you put the data file on one disk and the log file on a physically separate disk. Then the arms don’t have to seek jumping back and forth between the data and the log. If you combine all your disks into one big RAID array, there is no way to separate data and log.

One of the most widely quoted performance characteristics is totally meaningless. A desktop disk can read data at a maximum rate of 100 megabytes per second. The SATA connection can run at 300 megabytes per second or a new faster 600 megabytes per second. However, spending more money to get a cable that is six times faster than the disk instead of just three times faster than the disk doesn’t make much sense. The disk itself is the choke point.

PIO means something is wrong

In 1985 the computer transferred data to and from the disk two bytes at a time using Programmed I/O or PIO. For the last few decades disks have transferred much larger blocks of data using DMA (Direct Memory Access). However, when a cable is loose or broken at power up time the BIOS can discover that something is wrong and fall back into PIO mode. When this happens, the disk performs very slowly and CPU utilization goes way, way up every time you read or write data. Check your connections. If the problem persists, you may have a bad disk.

To verify this is the problem, look in Windows Device Manager at the SATA disk controllers. That’s right, the problem does not show up in the Disk devices but rather in the Disk Controller devices. There are two devices on each controller, and Windows will show you what speed they are running at. If any is in PIO mode, you have a problem.

Server SAS Disks

SCSI is the name of a particular family of connectors and a command set. However, in current usage the term usually refers to Enterprise class disks with a 4.5 millisecond seek time and a rotation speed of 10K or 15K RPM.

The SCSI command set does provide more powerful options for optimization than is available for dumb ATA drives. However, effective use of this command set requires a lot of disks and some fairly powerful system software.

Modern Serial Attached SCSI (SAS) disks use basically the same type of connection as a SATA disk. One pair of wires sends data from the computer to the disk while another pair sends data from the disk to the computer. Different grades of wire can then be used to extend the distance between the computer and the disk so that a SAS disk can be a few meters away from the computer. In corporate server rooms, the disks can be in a different part of the rack than the CPU connected to them.

The most valuable part of the SAS architecture is seldom used, particularly when all you do is buy a standard server from Dell. Each SAS disk has two data connectors and has an “address” similar to the address on an Ethernet adapter. Each SAS controller has four or eight connectors and each of those connectors has an address. Now inside a Dell server each disk has one of its two attachments connected to one port on the controller. The effect is almost identical to SATA.

However, just as many computers, printers, and routers can be connected to each other using Ethernet cables and switches, so it is possible to get SAS switches that connect a bunch of disks to a bunch of computers. Physically any computer could in theory talk to any disk, but you configure each computer with the addresses of the disks it should use. Everything works the same until a computer (or maybe just a RAID adapter card) fails for some reason.

Then if you are using the full SAS architecture, a computer may have a second RAID card that can also get to the same disk using a different path. Or if the entire computer goes down, a backup computer can connect to the disks and pick up where the first computer left off. All of this is possible given the SAS architecture, but it is not typically the solution that hardware vendors sell. Instead, they treat SAS disks the same as an older generation of SCSI disks, and if you want to store disks separate from the computer case itself then the vendors would rather sell you some type of SAN device for big bucks rather than a SAS switch.